Objectives

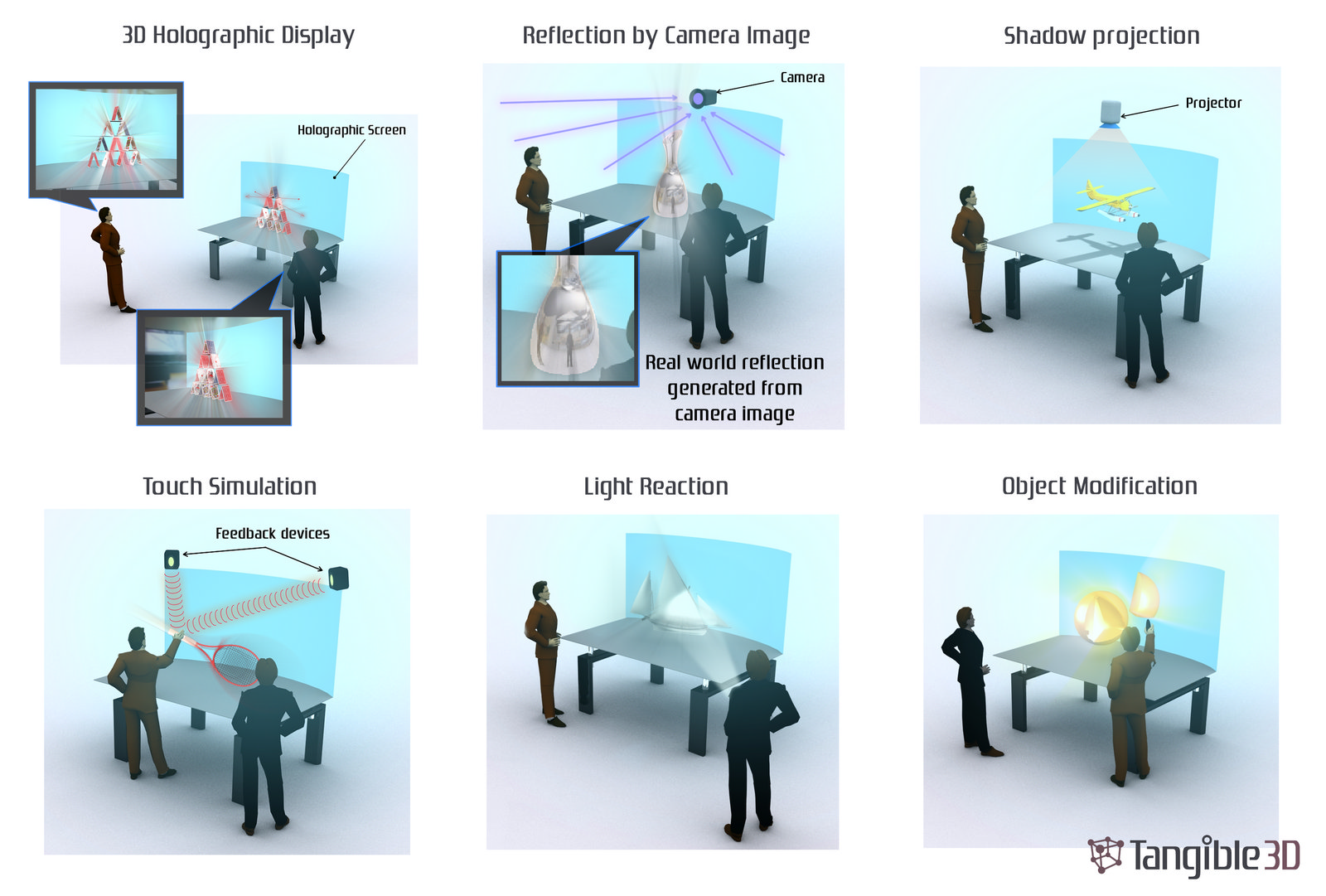

The Tangible3D project will create the first interactive, tactile true-3D displays. These will combine a light-field 3D display (from Holografika), which makes the complete 3D space of the scene directly available for interaction, with a non-contact tactile system (from Ultrahaptics) that allows users to feel objects in mid-air. The resulting system will allow users to reach into the viewing volume to touch virtual objects, and even to feel gradients of haptic feedback as their hands penetrate into those objects.
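To make the idea of a feedback gradient concrete, here is a minimal Python sketch of how a feedback level could grow with penetration depth. It assumes a spherical virtual object and a linear ramp that saturates at a chosen depth; the function name `feedback_intensity`, the `max_depth` parameter and the linear mapping are hypothetical choices for illustration only, not the project's design.

```python
import math


def feedback_intensity(fingertip, center, radius, max_depth):
    """Hypothetical mapping from penetration depth to a feedback level in [0, 1].

    The level is 0 outside a spherical virtual object, rises linearly with
    how far the fingertip is below the surface, and saturates at max_depth.
    """
    # Penetration depth below the sphere surface (positive means inside).
    depth = radius - math.dist(fingertip, center)
    if depth <= 0.0:
        return 0.0
    return min(depth / max_depth, 1.0)


# A 10 cm sphere at the origin; the ramp saturates 5 cm below its surface.
for z in (0.12, 0.10, 0.075, 0.05, 0.0):
    level = feedback_intensity((0.0, 0.0, z), (0.0, 0.0, 0.0), 0.10, 0.05)
    print(f"fingertip at z = {z:.3f} m -> level {level:.2f}")
```

A linear ramp is the simplest choice; any monotonic curve could be substituted without changing the structure.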

Today's media consumers and users expect to be able to control and manipulate digital media content. Increasingly, they will expect to interact with digital content as dexterously as with real-world physical objects: to manipulate and reshape it in a natural manner and to experience a feeling of natural touch. This expectation is already evident in how today's users want to interact with desktops and other devices through gestures. The Leap Motion controller (from Leap Motion) and Kinect (from Microsoft) are two examples of novel interactive systems that are entering the consumer market.

However, current displays are at best stereoscopic or autostereoscopic, and provide only a limited viewing experience. They will give way to novel technologies such as 3D light-field displays, which can present 3D digital objects floating in the air, resting on top of surfaces or growing out of a wall, and which offer natural parallax without glasses or head tracking (scenes from movies such as Iron Man are not fiction but can be realized with our technology). The evolution towards future screen-less displays requires volumetric technologies, holographic elements and holographic projections, as well as new, natural methods of human-computer interaction (HCI).

On the display research side, we will advance the state of the art by designing and implementing a screen-less 3D light-field display prototype whose screen plane lies in mid-air. Current light-field displays, by contrast, have half of their useful display volume inside the screen and only half outside it. Because the depth range of all autostereoscopic displays is limited, it is important not to lose half of this range when it comes to natural hand-based interaction. Although light-field displays have a considerably wide Field of Depth (FOD, in the range of 15 cm to 1.5 m both inside and outside the screen), doubling the effective interaction space still has clear advantages. The prototype will thus allow free-hand manipulation of objects both inside and outside the “screen plane”, that is, the center of projection where the display has its maximal spatial resolution.

The Tangible3D project will combine knowledge of novel “mid-air” display technologies with a novel tactile system that uses ultrasound to project perceptible forces and tactile sensations through the air directly onto the user. These sensations can take the form of discrete feedback points, a varying tactile landscape or three-dimensional shapes. We will use our wave-field synthesis algorithm to generate, with a phased array of ultrasound transducers, an interference pattern that corresponds to the desired feedback or shape. Regions of constructive interference are strong enough to be felt as vibrations in the skin, while residual ultrasound is canceled elsewhere, ensuring that only the desired feedback can be felt.
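The basic principle of focusing a phased array can be sketched in a few lines of Python with NumPy. This is a minimal single-focal-point illustration, not the project's wave-field synthesis algorithm: it assumes ideal point-source transducers with unit amplitude, ignores directivity and air attenuation, and uses a 40 kHz carrier and a hypothetical 16 × 16 array with 10 mm pitch. Each transducer is driven with a phase that cancels its propagation phase to the focal point, so all waves arrive there in phase and add constructively, while elsewhere they partially cancel.

```python
import numpy as np

SPEED_OF_SOUND = 343.0                       # m/s, air at roughly 20 °C
FREQUENCY = 40e3                             # Hz, assumed ultrasound carrier
K = 2 * np.pi * FREQUENCY / SPEED_OF_SOUND   # wavenumber in rad/m


def grid_array(n=16, pitch=0.01):
    """Hypothetical n x n planar transducer array in the z = 0 plane, centered at the origin."""
    coords = (np.arange(n) - (n - 1) / 2) * pitch
    xs, ys = np.meshgrid(coords, coords)
    return np.column_stack([xs.ravel(), ys.ravel(), np.zeros(n * n)])


def focus_phases(transducers, focal_point):
    """Drive phase per transducer that cancels its propagation phase to focal_point."""
    distances = np.linalg.norm(transducers - focal_point, axis=1)
    return np.mod(-K * distances, 2 * np.pi)


def pressure(transducers, phases, point):
    """Complex pressure at point: sum of unit-amplitude spherical waves with 1/r spreading."""
    r = np.linalg.norm(transducers - point, axis=1)
    return np.sum(np.exp(1j * (K * r + phases)) / r)


transducers = grid_array()
focus = np.array([0.0, 0.0, 0.20])           # focal point 20 cm above the array center
phases = focus_phases(transducers, focus)
p_focus = abs(pressure(transducers, phases, focus))
p_side = abs(pressure(transducers, phases, focus + np.array([0.03, 0.0, 0.0])))
print(f"|p| at focus: {p_focus:.1f}, 3 cm to the side: {p_side:.1f}")
```

In this sketch the suppression away from the focus comes only from phase mismatch between the waves; rendering several points, a tactile landscape or a 3D shape would require solving for phases (and possibly amplitudes) over many control points at once.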

The most innovative aspect of this approach is that, by combining the light-field synthesis technique with the wave-field synthesis technique, we can algorithmically determine salient tactile feedback points for the user without any knowledge of the application context. Our system will therefore be able to give users the "best" interactive experience without any additional information about the context in which it is used. Users will simply load the 3D data they want to explore onto our system, which will render it in true 3D AND allow them to feel tactile sensations at salient locations.
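The text does not specify how salient points are found. As a purely hypothetical stand-in for the geometric part of that step, the Python sketch below uses local surface variation, a common point-cloud feature defined as the smallest eigenvalue of a neighborhood's covariance divided by the sum of its eigenvalues. It is close to zero on flat regions and larger at edges and corners, and it needs no application context. The function names, the neighborhood size `k`, the brute-force neighbor search and the cube test data are all illustrative assumptions, not the project's algorithm.

```python
import numpy as np


def surface_variation(points, k=12):
    """Per-point surface variation: smallest covariance eigenvalue over their sum.

    Close to 0 on flat regions; larger at edges, corners and other sharp features.
    """
    # Brute-force k nearest neighbors (each point counts as its own neighbor).
    d2 = np.sum((points[:, None, :] - points[None, :, :]) ** 2, axis=-1)
    neighbors = np.argsort(d2, axis=1)[:, :k]
    scores = np.empty(len(points))
    for i, idx in enumerate(neighbors):
        centered = points[idx] - points[idx].mean(axis=0)
        eigvals = np.linalg.eigvalsh(centered.T @ centered)  # ascending order
        scores[i] = eigvals[0] / max(eigvals.sum(), 1e-12)
    return scores


def salient_points(points, n=8, k=12):
    """Hypothetical selector: the n points with the highest surface variation."""
    order = np.argsort(surface_variation(points, k))[::-1]
    return points[order[:n]]


def cube_surface(n=9):
    """Hypothetical test data: the unit cube's surface sampled on an n x n grid per face."""
    t = np.linspace(0.0, 1.0, n)
    u, v = (a.ravel() for a in np.meshgrid(t, t))
    faces = []
    for axis in range(3):
        others = [a for a in range(3) if a != axis]
        for value in (0.0, 1.0):
            face = np.empty((u.size, 3))
            face[:, axis] = value
            face[:, others[0]] = u
            face[:, others[1]] = v
            faces.append(face)
    # Edge and corner samples are shared by several faces; keep one copy of each.
    return np.unique(np.vstack(faces), axis=0)


cloud = cube_surface()
print(salient_points(cloud, n=8))
```

For large scenes, the quadratic-memory neighbor search would be replaced by a spatial index such as a k-d tree.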

Together, these techniques will form the basis of an ultra-realistic visualization and interaction system that can be applied in many technical and educational use cases.